AI Metrology 101: Ontology as a Measurement Basis

You can’t measure what you haven’t defined

Modern LLMs operate in latent spaces with thousands of dimensions, most of which humans cannot directly observe or interpret. Their behavior shifts with prompt wording, user style, context, training artifacts, sampling temperature, and other subtle factors. To make matters worse, AI agents often decline queries as “irrelevant,” even when the request is relevant from the user’s perspective.

In other words, an LLM is a multi-dimensional system, and the dimensions that shape its behavior include:

- domain context,

- business constraints,

- user intent,

- world knowledge,

- safety rules,

- implicit moral and ethical assumptions.

We cannot measure such a system without fixing a frame of reference.

The Ontological Model as a Measurement Frame

The key idea is to reduce degrees of freedom. All meaningful measurement requires:

- A restricted domain

- A precise vocabulary

- A known structure

A domain ontology, represented as a simple entity-relationship diagram (ERD), provides exactly that.

It becomes a coordinate system for measurement: it specifies which concepts exist, which relationships are valid, which properties matter, and, crucially, which topics are out of scope.

The ERD must be both:

- Formal, so it supports reproducibility and automated tests

- Human-readable, so teams can interpret outcomes and debug behavior

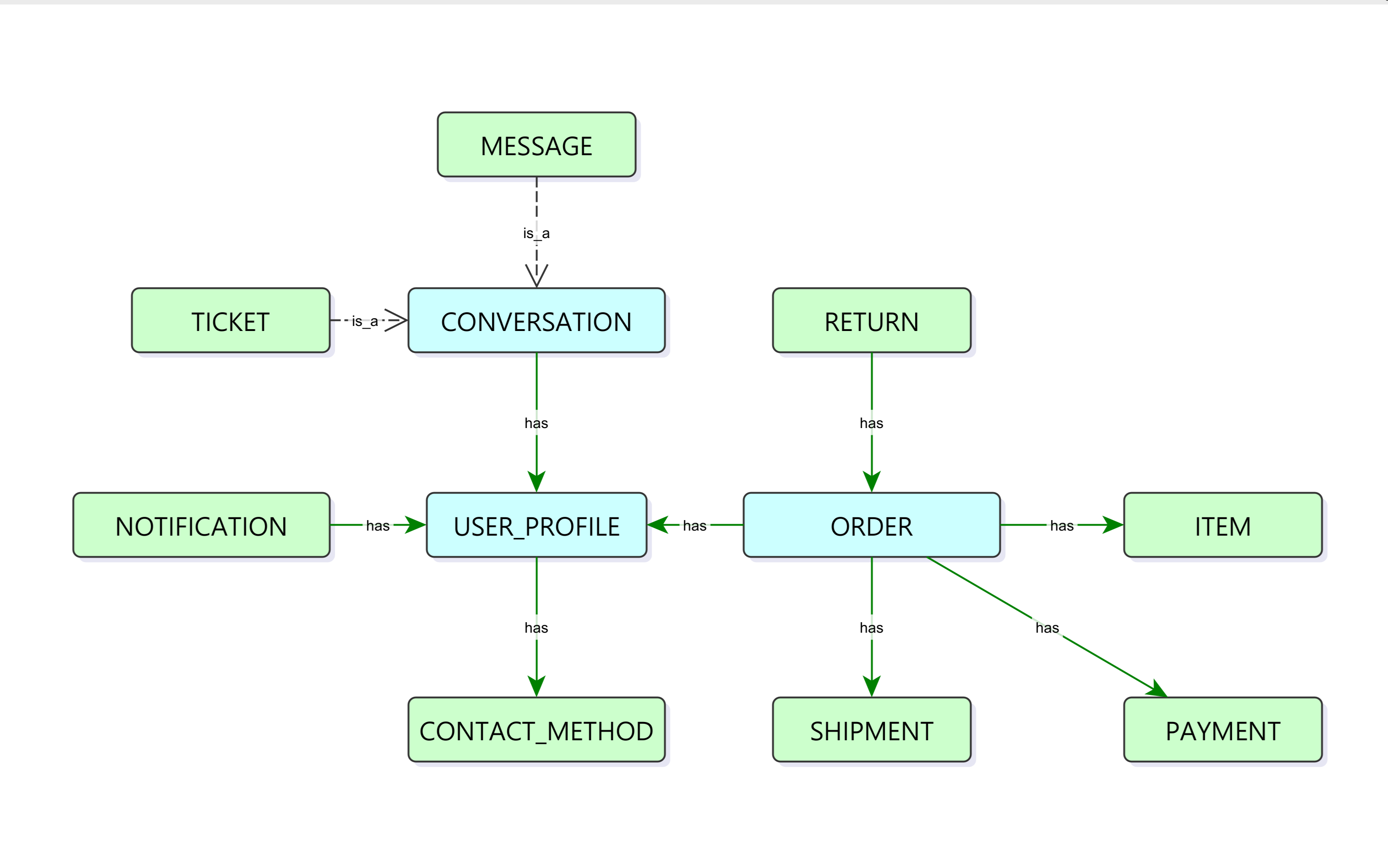
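To make the "formal and human-readable" requirement concrete, here is a minimal Python sketch of one way to hold an ontology as data that can be validated automatically and also rendered as a readable diagram. The `Ontology` and `Relationship` classes, the `min_targets` field, and the choice of Mermaid's `erDiagram` text syntax as the human-readable output are all illustrative assumptions, not part of any specific tool.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Relationship:
    """Hypothetical edge type: each `source` record relates to some `target` records."""
    source: str
    target: str
    label: str
    min_targets: int = 0  # 0 = "zero or more", 1 = "one or more"


@dataclass
class Ontology:
    """Hypothetical container: concepts that exist, valid relationships, excluded topics."""
    entities: set[str]
    relationships: list[Relationship]
    out_of_scope: set[str] = field(default_factory=set)

    def validate(self) -> list[str]:
        """Formal side: every relationship must connect two declared entities."""
        errors = []
        for rel in self.relationships:
            for end in (rel.source, rel.target):
                if end not in self.entities:
                    errors.append(f"{rel.label}: unknown entity {end!r}")
        return errors

    def to_mermaid(self) -> str:
        """Human-readable side: render relationships as a Mermaid erDiagram.
        For simplicity, the source side is always drawn as "exactly one"."""
        lines = ["erDiagram"]
        for rel in self.relationships:
            target_card = "|{" if rel.min_targets >= 1 else "o{"
            lines.append(f'    {rel.source} ||--{target_card} {rel.target} : "{rel.label}"')
        return "\n".join(lines)


# Usage: a fragment of the e-commerce ontology discussed below.
support = Ontology(
    entities={"User", "Order", "Item", "Contact_Method"},
    relationships=[
        Relationship("User", "Order", "places"),
        Relationship("Order", "Item", "contains", min_targets=1),
        Relationship("User", "Contact_Method", "has", min_targets=1),
    ],
    out_of_scope={"crypto trading"},
)
assert support.validate() == []
print(support.to_mermaid())
```

The printed text starts with `erDiagram` followed by lines such as `Order ||--|{ Item : "contains"`, so the same object that drives automated checks can be pasted into a Mermaid renderer for review.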

Example: E-commerce Support Ontology

Consider a minimal conceptual map for e-commerce customer service. Its core entities include User, Conversation, Order, Shipment, Payment, Return, Notification, and Contact Method.

This structure immediately creates testable invariants:

- "Every Order must have at least one Item"

- "Every Conversation must contain a Message"

- "Every User_Profile must have a Contact_Method"
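These invariants can be checked mechanically. The minimal Python sketch below assumes records and links are available as plain tuples (for instance, from test fixtures or from the state an agent produces during a conversation); the `INVARIANTS` table and the `check_invariants` function are hypothetical names.

```python
from collections import defaultdict

# Hypothetical encoding of the invariants above: (entity, required related entity, minimum count).
INVARIANTS = [
    ("Order", "Item", 1),
    ("Conversation", "Message", 1),
    ("User_Profile", "Contact_Method", 1),
]


def check_invariants(records, links, invariants=INVARIANTS):
    """Return a list of human-readable invariant violations.

    records: {entity_type: [record_id, ...]}
    links:   iterable of (source_type, source_id, target_type, target_id)
    """
    # Count how many related records of each type every source record has.
    counts = defaultdict(int)
    for src_type, src_id, dst_type, _dst_id in links:
        counts[(src_type, src_id, dst_type)] += 1

    violations = []
    for entity, related, minimum in invariants:
        for rec_id in records.get(entity, []):
            n = counts.get((entity, rec_id, related), 0)
            if n < minimum:
                violations.append(f"{entity} {rec_id} has {n} {related} (needs >= {minimum})")
    return violations


# Usage: order o2 has no items, so it violates the first invariant.
records = {"Order": ["o1", "o2"], "Conversation": ["c1"], "User_Profile": ["u1"]}
links = [
    ("Order", "o1", "Item", "i1"),
    ("Conversation", "c1", "Message", "m1"),
    ("User_Profile", "u1", "Contact_Method", "cm1"),
]
print(check_invariants(records, links))  # ['Order o2 has 0 Item (needs >= 1)']
```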

Such constraints enable clear, ontology-aware test cases:

- “Track my order” → must reference an existing shipment

- “Return this item” → must not hallucinate return policies

- “Change my email” → must interact only with contact methods

- “I want to buy crypto” → domain-inconsistent → must decline
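Test cases like these can be written as data and graded automatically. This minimal Python sketch assumes the agent's reply has already been mapped to the ontology entities it touched and to a declined/answered flag (for example, from its tool calls); `AgentReply`, `OntologyCase`, and `grade` are hypothetical names. It covers the tracking, email-change, and crypto cases; checking that a return policy is not hallucinated would additionally need the real policy as a source of truth.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AgentReply:
    """Hypothetical structured view of one LLM reply."""
    text: str
    entities: set[str] = field(default_factory=set)  # ontology entities the reply touched
    declined: bool = False


@dataclass
class OntologyCase:
    """Hypothetical ontology-aware test case."""
    prompt: str
    must_reference: set[str] = field(default_factory=set)  # entities the reply must touch
    allowed: Optional[set[str]] = None  # if set, the reply may touch only these entities
    must_decline: bool = False  # True for domain-inconsistent requests


def grade(case: OntologyCase, reply: AgentReply) -> list[str]:
    """Return a list of failure reasons; an empty list means the case passed."""
    if case.must_decline:
        return [] if reply.declined else ["answered an out-of-domain request"]
    failures = []
    if reply.declined:
        # The "irrelevant" refusal problem: declining a request the domain covers.
        failures.append("declined an in-domain request")
    missing = case.must_reference - reply.entities
    if missing:
        failures.append(f"missing references: {sorted(missing)}")
    if case.allowed is not None:
        extra = reply.entities - case.allowed
        if extra:
            failures.append(f"touched disallowed entities: {sorted(extra)}")
    return failures


CASES = [
    OntologyCase("Track my order", must_reference={"Shipment"}),
    OntologyCase("Change my email", must_reference={"Contact_Method"},
                 allowed={"User", "Contact_Method"}),
    OntologyCase("I want to buy crypto", must_decline=True),
]

# Usage: the email-change reply also touched Payment, which the case forbids.
reply = AgentReply("Email updated.", entities={"Contact_Method", "Payment"})
print(grade(CASES[1], reply))  # ["touched disallowed entities: ['Payment']"]
```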

What We Can Measure

Once the domain is fixed, we can measure LLM behavior along meaningful axes:

- correctness

- completeness

- intent consistency

- out-of-ontology references

- reasoning robustness
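Once each reply has been graded per axis, a per-axis pass rate is a natural summary, and the out-of-ontology check reduces to a set difference against the declared entities. The minimal Python sketch below assumes an upstream step (not shown, and hypothetical here) has already extracted the concepts each reply mentions and graded the other axes as pass/fail; `ENTITIES`, `out_of_ontology`, and `axis_pass_rates` are illustrative names.

```python
from collections import Counter

# Entities of the e-commerce example, plus Item, Message, and User_Profile from the invariants.
ENTITIES = {"User", "User_Profile", "Conversation", "Message", "Order", "Item",
            "Shipment", "Payment", "Return", "Notification", "Contact_Method"}


def out_of_ontology(concepts: set[str], entities: set[str] = ENTITIES) -> set[str]:
    """Concepts a reply referenced that the ontology does not declare."""
    return concepts - entities


def axis_pass_rates(results: list[dict[str, bool]]) -> dict[str, float]:
    """Fraction of graded replies that passed each axis (e.g. correctness, completeness)."""
    passed, seen = Counter(), Counter()
    for result in results:
        for axis, ok in result.items():
            seen[axis] += 1
            passed[axis] += int(ok)
    return {axis: passed[axis] / seen[axis] for axis in seen}


# Usage: the second reply is wrong and mentions "Loyalty_Points", which the ontology lacks.
results = [
    {"correctness": True, "no_out_of_ontology": not out_of_ontology({"Order", "Shipment"})},
    {"correctness": False, "no_out_of_ontology": not out_of_ontology({"Order", "Loyalty_Points"})},
]
print(axis_pass_rates(results))  # {'correctness': 0.5, 'no_out_of_ontology': 0.5}
```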

The ontology acts as a map of the domain, helping localize faults, analyze failures, and guide LLM fine-tuning.
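As one way to use the ontology for fault localization, each test case can be tagged with the entities it exercises, and entities can then be ranked by failure rate. This is a minimal Python sketch; `failure_rate_by_entity` is a hypothetical helper and the results in the usage example are illustrative inputs, not measurements.

```python
from collections import defaultdict


def failure_rate_by_entity(results):
    """Rank ontology entities by how often the test cases that exercise them fail.

    results: iterable of (entities_exercised, passed) pairs, one per test case.
    Returns {entity: failure_rate}, sorted from most to least failure-prone
    (ties keep first-seen order, since Python's sort is stable).
    """
    total = defaultdict(int)
    failed = defaultdict(int)
    for entities, passed in results:
        for entity in entities:
            total[entity] += 1
            if not passed:
                failed[entity] += 1
    rates = {entity: failed[entity] / total[entity] for entity in total}
    return dict(sorted(rates.items(), key=lambda item: item[1], reverse=True))


# Usage with illustrative results: every case touching Return or Payment failed.
results = [
    (("Order", "Shipment"), True),
    (("Return",), False),
    (("Return", "Payment"), False),
    (("Shipment",), False),
]
print(failure_rate_by_entity(results))
# {'Return': 1.0, 'Payment': 1.0, 'Shipment': 0.5, 'Order': 0.0}
```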

Why Humans Need to Understand the Model

Because the ERD is simple and visual, it becomes a shared language for developers, product teams, compliance officers, UX writers, and auditors. Everyone can see what behavior is expected from the AI system and how to evaluate it. This greatly reduces ambiguity and keeps discussions anchored to a common model.

Limitations

Ontological models are not magic:

- They must evolve with the product

- Overly detailed ontologies become hard to use

- They require thoughtful curation

Still, even a small, clean ontology provides a surprisingly powerful measurement frame for AI behavior.