AI Metrology 101: Noise in AI Input

Noise is not the enemy. It is the sea we must learn to navigate.

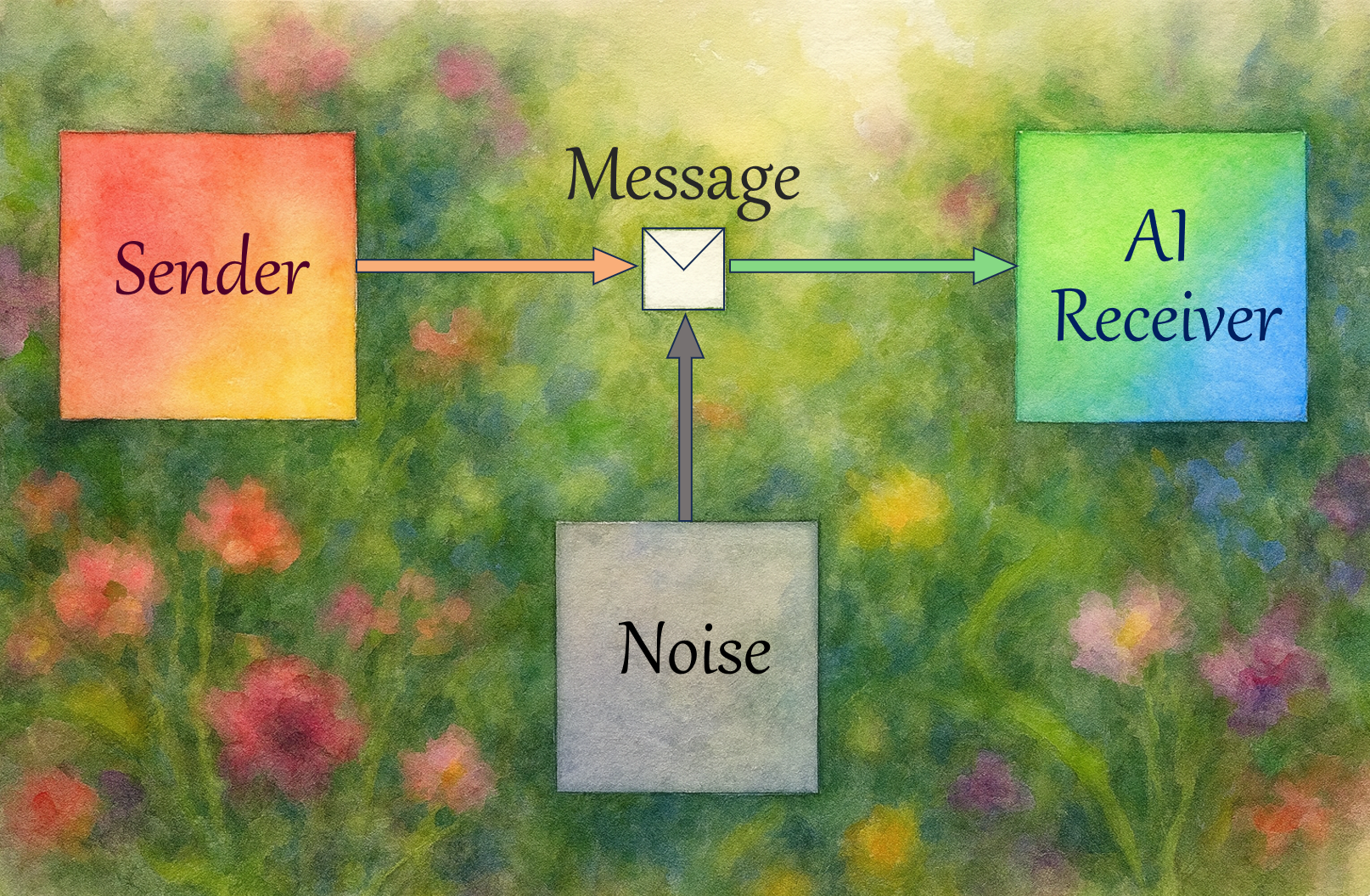

In 1948, Claude Shannon introduced a general model of communication: an information source, a transmitter, a channel, a receiver, a destination, and (!) a noise source.

Noise was not a minor detail. It was the central enemy of reliable communication. The entire field of information theory was built around quantifying noise and designing systems that could withstand it.

Then something interesting happened.

As computers evolved, noise gradually disappeared from our mental model of human-computer interaction.

- Press a button → a buttonClick event fires with perfect fidelity.

- Press a key → the exact symbol appears.

The channel became effectively noise-free, and software engineering internalized that assumption so deeply that we stopped thinking about noise altogether.

If an error occurred, it was a bug, not noise. The interaction model was discrete and deterministic.

AI changed everything

Modern AI systems flipped the paradigm. Suddenly, computers accept:

- vague, incomplete requests

- long, informal, conversational prompts

- typos, misspellings, contradictions, ambiguity

- dialects, metaphors, slang, domain jargon

And the system does not crash. It responds!

It tries to interpret.

It tries to approximate meaning.

From Shannon’s perspective, that means one thing:

The channel is noisy again, and this time, noise is a feature, not a failure.

AI systems are designed to be tolerant of noise. But this tolerance introduces a new problem: we have no established way to tell how much noise is too much.

Input Noise Metrology

If the input is no longer clean, then reliability depends not only on the model's output, but also on how the system deals with uncertainty and distortion in the input.

We need to measure:

- What types of noise an AI system can handle (spelling noise, structural noise, semantic noise, contextual noise, contradiction noise, density noise…)

- How the system degrades as noise increases (graceful degradation? sudden collapse? hallucinations? refusals?)

- How business rules, guardrails, or RAG layers amplify or dampen noise

- Which safety-critical tasks are sensitive to noise, and at what thresholds
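
To make the first two points concrete, here is a minimal sketch of a degradation curve for one noise type (spelling noise). Everything in it is an assumption made for illustration: `add_spelling_noise` is a crude typo model that swaps adjacent letters, `toy_intent_classifier` is a hypothetical keyword matcher standing in for the AI system under test, and `PROMPTS` is a made-up labelled set. A real measurement would replace the stand-in with calls to the actual system and use a representative prompt set.

```python
import random


def add_spelling_noise(text: str, rate: float, rng: random.Random) -> str:
    """Swap adjacent letters with probability `rate` (a crude typo model)."""
    chars = list(text)
    i = 0
    while i < len(chars) - 1:
        if chars[i].isalpha() and chars[i + 1].isalpha() and rng.random() < rate:
            chars[i], chars[i + 1] = chars[i + 1], chars[i]
            i += 2  # skip the swapped pair so each letter moves at most once
        else:
            i += 1
    return "".join(chars)


def toy_intent_classifier(prompt: str) -> str:
    """Hypothetical stand-in for the system under test: exact keyword matching."""
    words = prompt.lower().split()
    if "refund" in words:
        return "refund"
    if "cancel" in words:
        return "cancel"
    return "unknown"


def degradation_curve(system, labelled_prompts, rates, trials=200, seed=0):
    """Return {noise_rate: accuracy} over noisy copies of each labelled prompt."""
    rng = random.Random(seed)  # fixed seed keeps the measurement repeatable
    curve = {}
    for rate in rates:
        correct = 0
        total = 0
        for prompt, expected in labelled_prompts:
            for _ in range(trials):
                noisy = add_spelling_noise(prompt, rate, rng)
                correct += system(noisy) == expected
                total += 1
        curve[rate] = correct / total
    return curve


# Made-up labelled prompts, for illustration only.
PROMPTS = [
    ("please refund my last order", "refund"),
    ("i want to cancel my subscription", "cancel"),
]

if __name__ == "__main__":
    rates = [0.0, 0.05, 0.1, 0.2, 0.4]
    for rate, acc in degradation_curve(toy_intent_classifier, PROMPTS, rates).items():
        print(f"noise rate {rate:.2f}: accuracy {acc:.2f}")
```

The shape of the resulting curve is the interesting part: a slow decline suggests graceful degradation, while a sharp drop at some rate marks a threshold worth recording. The same harness could be reused for other noise types by swapping in a different noise function.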

The absence of such metrics is already creating real-world risks:

- Small wording changes causing opposite decisions

- Typos causing silent hallucinations

- Vague internal queries generating misleading results

- Customer support bots misunderstanding stressed users

- Agents drifting because of accumulated conversational noise

Noise is back. We just stopped noticing it because AI systems are very good at pretending everything is fine.

To conclude:

AI input noise tolerance must be measured and quantified, because reliability depends not only on what the model answers, but also on how it interprets the messy reality of human input.